An excursion through the Cauchy-Schwarz Inequality

We sure don’t need yet another Cauchy-Schwarz Inequality post; we already have Wikipedia, Brilliant and several posts on Math Stack Exchange. I will, however, continue this post as if this line had never been written.

Real Vector Space Case

The statement of the Cauchy-Schwarz Inequality is simple: for all $x,y \in \mathbb{R}^n$ we have $\left | \left< x,y\right> \right | \leq \left | x \right | \left | y \right |$.

I first encountered this inequality while reading a book on real analysis, in my case Pugh’s book.

Let’s recall the definition of the inner product on the Euclidean vector space $\mathbb{R}^n$.

$$ \begin{equation} \left< x,y\right> = \sum_{i=1}^{n}x_{i}y_{i} \end{equation} $$
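To make the definition concrete, here is a small worked example with two vectors I picked only for illustration, $x=(1,2)$ and $y=(3,4)$, assuming the usual norm $\left | x \right | = \sqrt{\left< x,x\right>}$:

$$ \begin{align*} \left< x,y\right> &= 1\cdot 3 + 2\cdot 4 = 11 \\ \left | x \right | \left | y \right | &= \sqrt{1^2+2^2}\,\sqrt{3^2+4^2} = 5\sqrt{5} \approx 11.18 \end{align*} $$

So $11 \leq 11.18$, as the inequality claims for this pair.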

Pugh’s proof is as follows: for any two vectors $x$ and $y$, consider the new vector $w=x+ty$, where $t\in \mathbb{R}$ is a varying scalar. Then

$$ \begin{align} \begin{split} Q(t) & = \left < w,w \right > = \left < x+ty, x+ty \right > \\ & = \left <x,x \right > + 2t \left < x, y \right >+t^2\left <y,y \right > \\ & = a + b\,t +c\,t^2 \end{split} \end{align} $$

The terms $Q(t)=\left <w,w \right >$, $a=\left < x, x \right >$ and $c=\left < y, y \right >$ are non-negative by (1), since each one is a sum of squares, and $b=2\left < x, y \right >$. Look at the following image.

We just said that $Q(t)$ is non-negative for every $t$, so the parabola cannot cross the horizontal axis at two distinct points; the rightmost part of the image (positive discriminant) is impossible. Only the left and middle cases remain, in which the discriminant is negative or zero. Combining both we get $b^2-4ac \leq 0$. A little expansion gives the result.

$$ \begin{align*} \begin{split} b^2-4ac &\leq 0 \\ b^2 &\leq 4ac \\ (2\left < x, y \right >)^2 &\leq 4\left <x,x \right >\left <y,y \right > \\ 4\left < x, y \right >^2 &\leq 4\left |x \right |^2\left |y \right|^2 \\ \left < x, y \right >^2 &\leq \left |x \right |^2\left |y \right|^2 \\ \sqrt{\left < x, y \right >^2} &\leq \sqrt{\left |x \right |^2\left |y \right|^2} \\ \left | \left < x, y \right > \right | &\leq \left |x \right |\left |y \right| \quad \square \end{split} \end{align*} $$

That completes the proof for real vector spaces.
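The discriminant argument can also be checked numerically. The following is a minimal sketch, not part of Pugh's proof: the function names, the vector size, the sampling range and the tolerance are all my own assumptions. It builds $a$, $b$ and $c$ from the inner product and confirms that $b^2-4ac$ never comes out positive for random real vectors.

```python
import random


def inner(x, y):
    """Real inner product: sum of x_i * y_i."""
    return sum(xi * yi for xi, yi in zip(x, y))


def discriminant(x, y):
    """Discriminant b^2 - 4ac of Q(t) = a + b t + c t^2, where w = x + t y."""
    a = inner(x, x)
    b = 2 * inner(x, y)
    c = inner(y, y)
    return b * b - 4 * a * c


def check_real_case(n=5, trials=1000, seed=0, tol=1e-9):
    """Return True if b^2 - 4ac <= 0 (up to tol) for every random pair."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(-10, 10) for _ in range(n)]
        y = [rng.uniform(-10, 10) for _ in range(n)]
        if discriminant(x, y) > tol:
            return False
    return True


# Parallel vectors give a zero discriminant, i.e. equality in Cauchy-Schwarz.
print(discriminant([1.0, 2.0], [2.0, 4.0]))
print(check_real_case())
```

A random test like this proves nothing, of course; it is only a sanity check that the algebra above was expanded correctly.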

Complex Vector Space Case

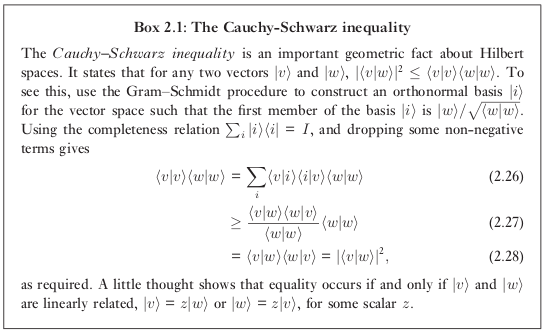

Some time passed after I learned Pugh’s proof. At some point I was reading Quantum Computation and Quantum Information and reached Box 2.1. I was following the book when I realized I didn’t understand the demonstration. I also thought: I already know a proof of Cauchy-Schwarz, so why is this one different? Then I realized that the previous proof does not carry over directly to complex vector spaces. I started looking through several quantum mechanics books and ended up with the following proof (also on Wikipedia).

First, the definition of the inner product is almost the same; however, the left argument of $\left < \cdot , \cdot\right >$ is conjugated, as follows.

$$ \begin{equation} \left< x,y\right> = \sum_{i=1}^{n}\bar{x_{i}}y_{i} \end{equation} $$

The statement of Cauchy-Schwarz becomes: for all $\mathbf{u},\mathbf{v} \in \mathbb{C}^n$ we have $\left | \left< \mathbf{u},\mathbf{v}\right> \right | \leq \left | \mathbf{u} \right | \left | \mathbf{v} \right |$. The modulus on the left-hand side is needed because the inner product is now a complex number.

As you can see, we only replaced $\mathbb{R}$ with $\mathbb{C}$; we also replaced $x$ with $\mathbf{u}$ and $y$ with $\mathbf{v}$ to be in line with the literature. Let’s first look at the trivial case where $\mathbf{v}=\mathbf{0}$ (the zero vector).

$$ \left < \mathbf{u} , \mathbf{0} \right > = 0 $$

By equation (3), every term of the sum is multiplied by a component of the zero vector, so the result is zero. Thus $\left | \left< \mathbf{u},\mathbf{v}\right> \right | \leq \left | \mathbf{u} \right | \left | \mathbf{v} \right |$ holds, in fact with equality: $\left | \left< \mathbf{u},\mathbf{0}\right> \right | = \left | \mathbf{u} \right | \left | \mathbf{0} \right |=0$.

Now, to handle the case $\mathbf{v} \ne \mathbf{0}$, we first define a vector $\mathbf{z}$ as follows.

$$ \mathbf {z} :=\mathbf {u} -{\frac {\langle \mathbf {v} ,\mathbf {u} \rangle }{\langle \mathbf {v} ,\mathbf {v} \rangle }}\mathbf {v} $$

The part $-{\frac {\langle \mathbf {v} ,\mathbf {u} \rangle }{\langle \mathbf {v} ,\mathbf {v} \rangle }}\mathbf {v}$ removes the component of $\mathbf{u}$ in the direction of $\mathbf{v}$ (this is the vector projection of $\mathbf{u}$ onto $\mathbf{v}$). Note the order of the arguments: since equation (3) conjugates the left argument, the coefficient must be $\langle \mathbf {v} ,\mathbf {u} \rangle$ rather than $\langle \mathbf {u} ,\mathbf {v} \rangle$. Thus, we have $\mathbf{z}$ and $\mathbf{v}$ orthogonal to each other. We can prove orthogonality as follows.

$$ \begin{align} \begin{split} \left < \mathbf{v} , \mathbf{z} \right > &= \left < \mathbf{v} , \mathbf {u} -\frac {\langle \mathbf {v} ,\mathbf {u} \rangle }{\langle \mathbf {v} ,\mathbf {v} \rangle }\mathbf {v} \right >\\ & = \left < \mathbf{v} , \mathbf{u} \right > -\frac {\langle \mathbf {v} ,\mathbf {u} \rangle }{\langle \mathbf {v} ,\mathbf {v} \rangle }\left < \mathbf {v}, \mathbf {v} \right > \\ & = \left < \mathbf{v} , \mathbf{u} \right > -\left < \mathbf {v}, \mathbf {u} \right > = 0 \end{split} \end{align} $$

Now, by the definition of $\mathbf{z}$, we know that

$$ \mathbf {u} ={\frac {\langle \mathbf {v} ,\mathbf {u} \rangle }{\langle \mathbf {v} ,\mathbf {v} \rangle }}\mathbf {v} +\mathbf {z} = \alpha \mathbf {v} +\mathbf {z} $$

where $\alpha={\frac {\langle \mathbf {v} ,\mathbf {u} \rangle }{\langle \mathbf {v} ,\mathbf {v} \rangle }}$. Thus, $$ \begin{align*} \begin{split} \left < \mathbf{u}, \mathbf{u} \right > &= \left < {\alpha}\mathbf {v} +\mathbf {z} , {\alpha}\mathbf {v} +\mathbf {z} \right > \\ & = \left < \alpha \mathbf{v}, \alpha \mathbf{v} \right > + \underbrace{\left < \alpha \mathbf{v} , \mathbf{z} \right >+ \left < \mathbf{z}, \alpha \mathbf{v} \right >}_{\text{zero by eq. (4)}} +\langle \mathbf{z},\mathbf{z}\rangle\\ & = \left < \alpha \mathbf{v}, \alpha \mathbf{v} \right > +\langle \mathbf{z},\mathbf{z}\rangle = \bar{\alpha}\alpha \langle \mathbf{v},\mathbf{v} \rangle +\langle \mathbf{z},\mathbf{z}\rangle \end{split} \end{align*} $$

Let’s now substitute the value of $\alpha$ into the last equation and multiply by $\langle \mathbf{v}, \mathbf{v} \rangle$, using $\bar{x}x=|x|^2$ and recalling that $|\langle \mathbf{v}, \mathbf{u} \rangle| = |\langle \mathbf{u}, \mathbf{v} \rangle|$. $$ \begin{align*} \begin{split} \langle \mathbf{u}, \mathbf{u} \rangle & = \bar{\alpha}\alpha \langle \mathbf{v},\mathbf{v} \rangle +\langle \mathbf{z},\mathbf{z}\rangle = |\alpha|^2 \langle \mathbf{v},\mathbf{v} \rangle +\langle \mathbf{z},\mathbf{z}\rangle \\ & = \frac {|\langle \mathbf {u} ,\mathbf {v} \rangle|^2 }{\langle \mathbf {v} ,\mathbf {v} \rangle^2 } \langle \mathbf{v},\mathbf{v} \rangle +\langle \mathbf{z},\mathbf{z}\rangle \\ \langle \mathbf{v}, \mathbf{v} \rangle \langle \mathbf{u}, \mathbf{u} \rangle &= \frac {|\langle \mathbf {u} ,\mathbf {v} \rangle|^2 }{\langle \mathbf {v} ,\mathbf {v} \rangle^2 } \langle \mathbf{v},\mathbf{v} \rangle^2 +\langle \mathbf{z},\mathbf{z}\rangle \langle \mathbf{v}, \mathbf{v} \rangle \\ &= |\langle \mathbf {u} ,\mathbf {v} \rangle|^2 +\langle \mathbf{z},\mathbf{z}\rangle \langle \mathbf{v}, \mathbf{v} \rangle \\ & \geq |\langle \mathbf {u} ,\mathbf {v} \rangle|^2 \end{split} \end{align*} $$

The inequality appears because the term $\langle \mathbf{z},\mathbf{z}\rangle \langle \mathbf{v}, \mathbf{v} \rangle$ is non-negative (each factor is the inner product of a vector with itself). Finally, to match the form of the statement, we swap the two sides and take square roots to obtain the inequality.

$$ \begin{align*} \begin{split} |\langle \mathbf {u} ,\mathbf {v} \rangle|^2 &\leq \langle \mathbf{v}, \mathbf{v} \rangle \langle \mathbf{u}, \mathbf{u} \rangle \\ \sqrt{|\langle \mathbf {u} ,\mathbf {v} \rangle|^2} &\leq \sqrt{\langle \mathbf{v}, \mathbf{v} \rangle \langle \mathbf{u}, \mathbf{u} \rangle } \\ |\langle \mathbf {u} ,\mathbf {v} \rangle| &\leq | \mathbf{v}| | \mathbf{u} | \quad \square \end{split} \end{align*} $$
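The decomposition used in this proof can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the helper names `inner`, `decompose` and `random_vector` are hypothetical, the vectors are random, and the coefficient is computed as $\langle \mathbf{v},\mathbf{u}\rangle / \langle \mathbf{v},\mathbf{v}\rangle$ so that $\mathbf{z}$ is orthogonal to $\mathbf{v}$ under the convention of equation (3), where the left argument is conjugated.

```python
import random


def inner(x, y):
    """Complex inner product: the left argument is conjugated."""
    return sum(xi.conjugate() * yi for xi, yi in zip(x, y))


def decompose(u, v):
    """Split u into alpha * v + z with z orthogonal to v (v must be non-zero)."""
    alpha = inner(v, u) / inner(v, v)
    z = [ui - alpha * vi for ui, vi in zip(u, v)]
    return alpha, z


def random_vector(rng, n):
    """Random vector in C^n with real and imaginary parts in [-1, 1]."""
    return [complex(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]


rng = random.Random(0)
u = random_vector(rng, 4)
v = random_vector(rng, 4)
alpha, z = decompose(u, v)

lhs = abs(inner(u, v)) ** 2                   # |<u,v>|^2
rhs = inner(u, u).real * inner(v, v).real     # <u,u><v,v>
gap = inner(z, z).real * inner(v, v).real     # <z,z><v,v>, non-negative

print(abs(inner(v, z)))   # close to 0: z is orthogonal to v
print(rhs - (lhs + gap))  # close to 0: the identity before the inequality
print(lhs <= rhs)
```

The `gap` term is exactly what gets dropped in the last step of the proof, so it also measures how far the pair is from equality.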

Complex Vector Space with a Twist

Previously I mentioned Box 2.1 from the book Quantum Computation and Quantum Information. It is shown in the following picture.

The step from equation (2.26) to equation (2.27) is what took me some time to understand. The first basis vector is taken to be $|i \rangle = |w\rangle / \sqrt{\langle w | w \rangle}$; let’s call the remaining basis vectors $|j \rangle$. Thus, in between both equations we could write the following.

$$ \langle v | v \rangle \langle w | w \rangle = \frac{\langle v | w \rangle \langle w | v \rangle}{\langle w | w \rangle}\langle w | w \rangle+ \underbrace{\sum_j \langle v | j \rangle \langle j | v \rangle \langle w | w \rangle}_{\substack{\text{non negative terms} \\ |j\rangle \text{ orthogonal to } |w\rangle}} $$

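This uses the completeness relation, which for this basis reads as follows (note that $|w\rangle$ has to be normalized).

$$ \frac{|w \rangle \langle w |}{\langle w | w \rangle}+ \sum_j |j \rangle \langle j | = I $$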
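To convince myself of this step, a small numerical sketch helps. It is not the book's construction verbatim: I assume a Gram-Schmidt procedure seeded with $|w\rangle$ followed by the standard basis vectors, and the names `inner` and `orthonormal_basis_from`, as well as the sample vectors, are made up for the example.

```python
import math


def inner(x, y):
    """<x|y> with the left argument conjugated, as in Dirac notation."""
    return sum(xi.conjugate() * yi for xi, yi in zip(x, y))


def orthonormal_basis_from(w):
    """Gram-Schmidt basis of C^n whose first member is w / sqrt(<w|w>).

    Assumes w is non-zero; standard basis vectors serve as extra candidates.
    """
    n = len(w)
    candidates = [list(w)] + [
        [1 + 0j if k == i else 0j for k in range(n)] for i in range(n)
    ]
    basis = []
    for c in candidates:
        # Remove the components along the basis vectors found so far.
        for e in basis:
            coeff = inner(e, c)
            c = [ck - coeff * ek for ck, ek in zip(c, e)]
        norm = math.sqrt(inner(c, c).real)
        if norm > 1e-10:  # skip candidates that are linearly dependent
            basis.append([ck / norm for ck in c])
    return basis


v = [1 + 2j, 0.5 - 1j, -1j]
w = [2 - 1j, 1j, 3 + 0j]
basis = orthonormal_basis_from(w)

# Completeness: the sum over the basis of <v|i><i|v> equals <v|v>.
terms = [abs(inner(e, v)) ** 2 for e in basis]
total = sum(terms)

# The first term alone is <v|w><w|v> / <w|w>; the rest are non-negative.
first = abs(inner(v, w)) ** 2 / inner(w, w).real

print(abs(total - inner(v, v).real))  # close to 0
print(abs(terms[0] - first))          # close to 0
```

Dropping every term except the first is what turns the equality into the Cauchy-Schwarz inequality.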

Finally, I would like to point out that the applications of Cauchy-Schwarz are varied; one of the best known is Heisenberg’s Uncertainty Principle. Also, the triangle inequality can be derived from Cauchy-Schwarz.
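As a sketch of that last remark, here is the usual derivation of the triangle inequality, written with the same inner product notation as above. The first inequality uses $\mathrm{Re}\,\langle \mathbf{u},\mathbf{v}\rangle \leq |\langle \mathbf{u},\mathbf{v}\rangle|$ and the second one is Cauchy-Schwarz.

$$ \begin{align*} |\mathbf{u}+\mathbf{v}|^2 &= \langle \mathbf{u}+\mathbf{v}, \mathbf{u}+\mathbf{v} \rangle = |\mathbf{u}|^2 + 2\,\mathrm{Re}\,\langle \mathbf{u},\mathbf{v}\rangle + |\mathbf{v}|^2 \\ &\leq |\mathbf{u}|^2 + 2\,|\langle \mathbf{u},\mathbf{v}\rangle| + |\mathbf{v}|^2 \\ &\leq |\mathbf{u}|^2 + 2\,|\mathbf{u}||\mathbf{v}| + |\mathbf{v}|^2 = (|\mathbf{u}|+|\mathbf{v}|)^2 \end{align*} $$

Taking square roots on both sides gives $|\mathbf{u}+\mathbf{v}| \leq |\mathbf{u}|+|\mathbf{v}|$.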